Sober Up

Addressing Generative AI’s Limitations is a Serious Business

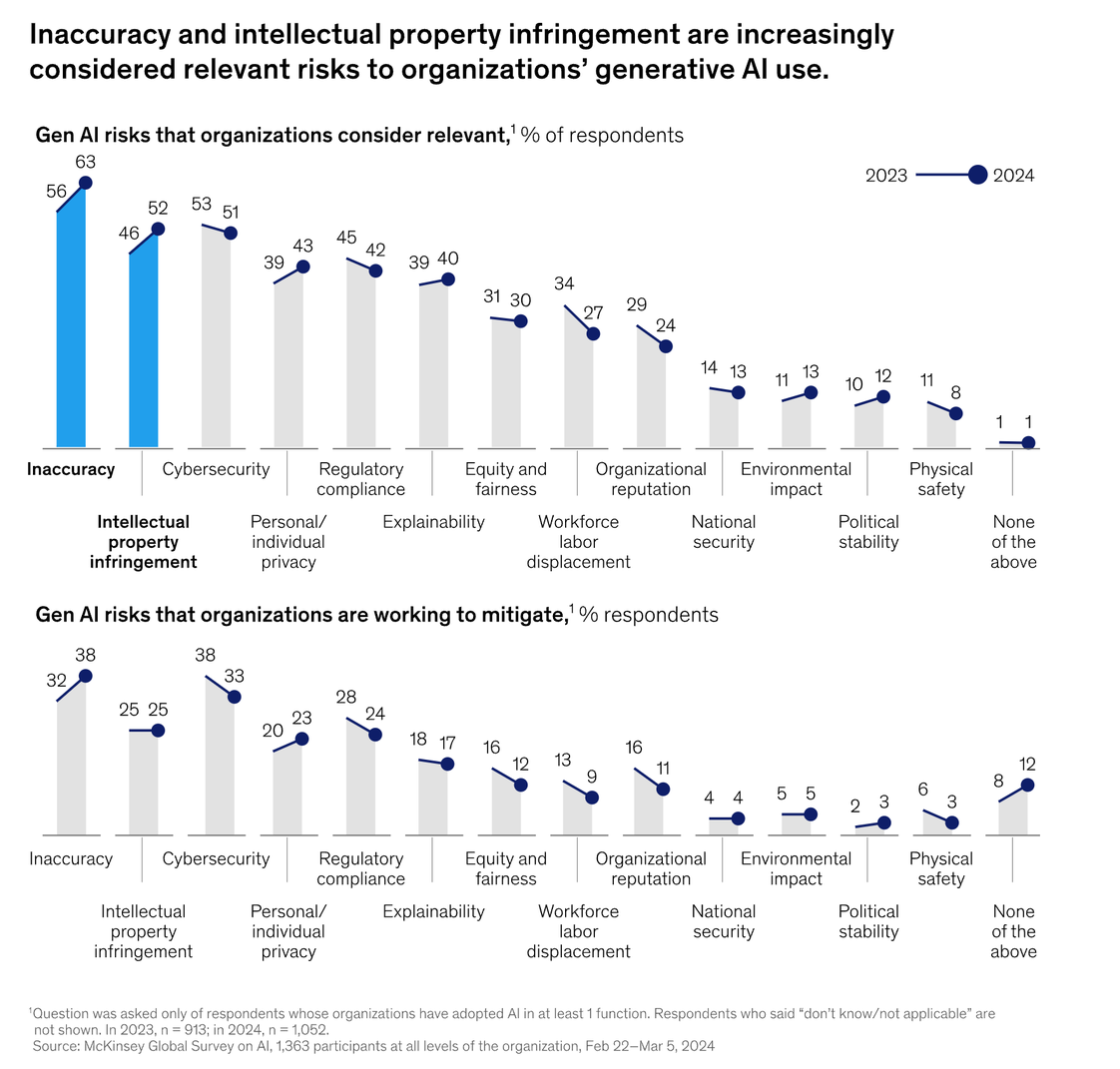

Is the honeymoon phase of Generative AI (GenAI) over? Many seem to think so. As enterprises move from euphoric discovery to more pragmatic and strategic deployment of the technology, advancing past the pilot phase or proof-of-concept has proved to be a challenge. McKinsey & Co. found that only 11% of the companies they polled have adopted GenAI at scale. Another survey by Lucidworks, a San Francisco-based enterprise search solutions provider, shows that just a quarter of GenAI initiatives have reached full implementation so far. What is setting enterprises back from tapping into the vast potential of GenAI?

Executive buy-in and resources aside, the most cited challenges stem from the inherent limitations of AI itself. Anyone who has used an AI chatbot knows that it can present inaccurate information as truth – a phenomenon known as “hallucination.” However, this tendency to confabulate is not simply “a bug” that needs to be fixed. Today’s large language models (LLMs) are designed to produce “creative” content. As hyper-advanced autocomplete tools, they are trained to produce plausible strings of words, not verified facts. According to GenAI startup Vectara, existing chatbots hallucinate anywhere between 3% and 22% of the time. That prevalence makes it challenging for organizations to implement GenAI across their workflows – from resolving customer inquiries to optimizing inventory and creating reports.

Another factor causing enterprises to proceed cautiously with GenAI adoption is data security. Sensitive or proprietary information can leak when it is used for model training or simply entered into a chat window as a prompt. Imagine a hedge fund trader accidentally receiving material non-public information about a publicly listed company in a response from a GenAI chatbot. Similarly, a lawyer could inadvertently breach client confidentiality when using GenAI to draft legal briefs or contracts. Even within a single organization, unauthorized data access is a complication because GenAI models do not inherently support role-based or granular access controls.

As daunting as the challenges may seem, we have seen a growing crop of companies offering solutions to help enterprises deploy AI safely and effectively.

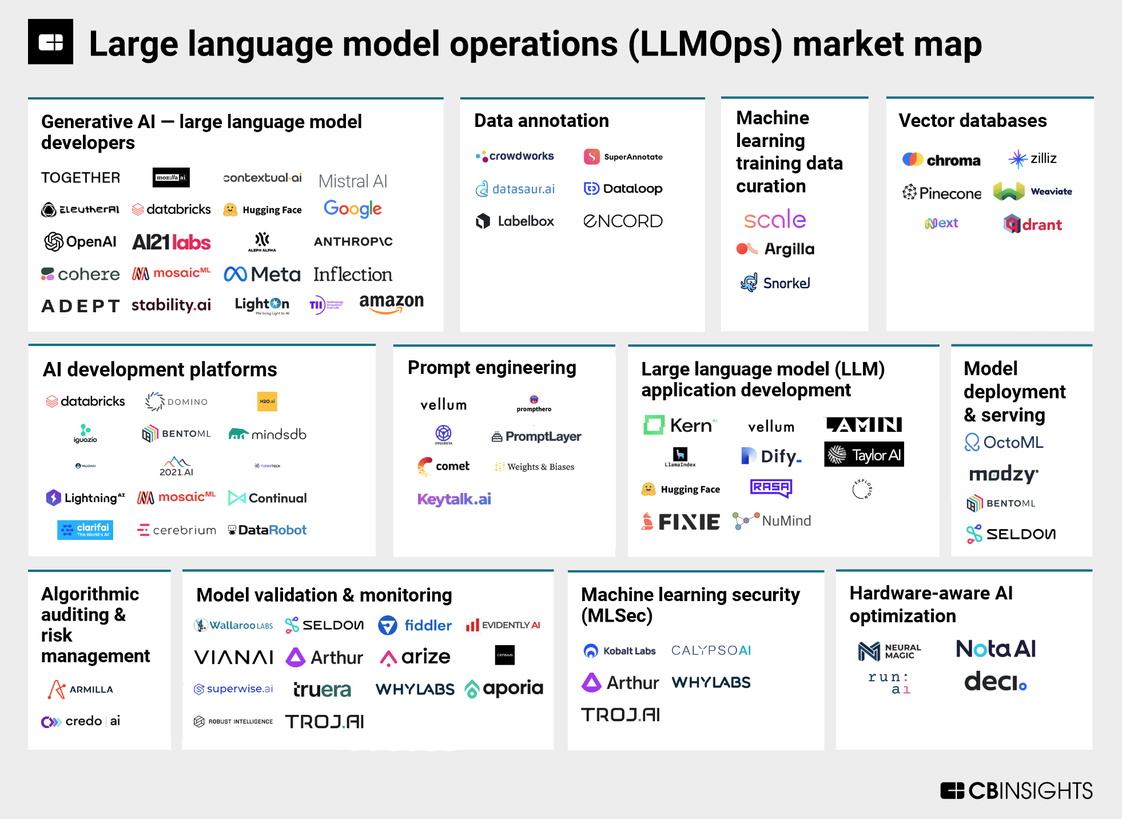

Take Retrieval-Augmented Generation (RAG)-as-a-Service. As a technique, RAG directs an underlying LLM to retrieve and reference information from up-to-date, predetermined sources, thereby enhancing the accuracy, relevance and explainability of AI-generated output. It is generally less costly and time-consuming than “fine-tuning” a model for a specific use case, as the latter requires substantial computing power and advanced AI expertise. Nevertheless, applying RAG comes with its own complexity. The process typically involves integrating and maintaining at least one vector database as well as a data ingestion pipeline that evolves with the knowledge corpus. In other words, data and software engineers need to orchestrate a myriad of APIs, data formats and integration points to make RAG possible.
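To make the moving parts concrete, here is a minimal sketch of the retrieval half of RAG. It is an illustration under loud assumptions, not a production design: the bag-of-words `embed` function stands in for a real embedding model, `TinyVectorStore` is a hypothetical in-memory substitute for a vector database, and the sample policy documents are invented. The final call to an LLM is deliberately left out.

```python
import math
import re
from collections import Counter


def embed(text):
    """Toy 'embedding': term counts. A real system would call an embedding model."""
    return Counter(re.findall(r"[a-z0-9]+", text.lower()))


def cosine(a, b):
    """Cosine similarity between two sparse term-count vectors."""
    dot = sum(a[t] * b[t] for t in a if t in b)
    norm = math.sqrt(sum(v * v for v in a.values())) * math.sqrt(
        sum(v * v for v in b.values())
    )
    return dot / norm if norm else 0.0


class TinyVectorStore:
    """Hypothetical in-memory stand-in for a vector database."""

    def __init__(self):
        self.items = []  # list of (doc_id, text, vector)

    def ingest(self, doc_id, text):
        # The "ingestion pipeline": embed each document as it enters the corpus.
        self.items.append((doc_id, text, embed(text)))

    def search(self, query, k=2):
        # Rank stored documents by similarity to the query and keep the top k.
        q = embed(query)
        ranked = sorted(self.items, key=lambda it: cosine(q, it[2]), reverse=True)
        return [(doc_id, text) for doc_id, text, _ in ranked[:k]]


def build_prompt(question, store, k=2):
    """Ground the question in retrieved sources before it reaches the LLM."""
    hits = store.search(question, k)
    context = "\n".join(f"[{doc_id}] {text}" for doc_id, text in hits)
    return (
        "Answer using only the sources below and cite their ids.\n\n"
        f"Sources:\n{context}\n\nQuestion: {question}"
    )


store = TinyVectorStore()
store.ingest("policy-01", "Refunds are issued within 14 days of a returned purchase.")
store.ingest("policy-02", "Standard shipping takes five business days.")
store.ingest("policy-03", "Gift cards cannot be exchanged for cash.")

prompt = build_prompt("How long do refunds take?", store, k=1)
print(prompt)
```

Even in this toy form, the prompt carries source ids alongside the retrieved text, which is where the explainability benefit comes from. Every piece shown here (embedding, storage, ranking, prompt assembly) is a separate component to integrate and maintain in a real deployment.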

We believe RAG-as-a-Service, or RaaS, which provides an end-to-end solution across all aspects of a RAG architecture, is well positioned to capture a considerable portion of AI investment spend. An obvious reason is that companies of all kinds will grow to appreciate the need to weave domain-specific GenAI models into their enterprise applications, be it for operational efficiency or for harnessing data to make better decisions. For instance, 80% of large enterprise finance teams will rely on internally managed generative AI models trained with proprietary business data by 2026, says Gartner, a global technology consulting firm.

Aside from RaaS, which helps reduce the complexity of scaling AI, the growing deployment of industry-focused or customized GenAI frameworks is also expected to drive demand for model observability platforms. With the ability to detect data drift, biases and other anomalies, these platforms offer real-time monitoring and diagnostics that are essential to ensuring the integrity and performance of LLM-based applications.
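As one illustration of what drift detection can look like, the sketch below computes a Population Stability Index (PSI) between a baseline sample and a recent sample of some numeric signal, such as prompt length. This is one common drift metric swapped in for illustration, not a description of how any particular observability platform works. The function name `psi`, the bin count, and the 0.2 alert threshold (a widely used rule of thumb) are all assumptions, as is the synthetic data.

```python
import math


def psi(baseline, current, bins=5):
    """Population Stability Index between two samples of a numeric signal.

    Bins are equal-width over the baseline's range; values outside that
    range are clamped into the first or last bin.
    """
    lo, hi = min(baseline), max(baseline)
    width = (hi - lo) / bins or 1.0  # fall back to 1.0 if the baseline is constant

    def shares(values):
        counts = [0] * bins
        for v in values:
            idx = int((v - lo) / width)
            counts[min(max(idx, 0), bins - 1)] += 1
        # A small floor keeps empty bins from producing log(0).
        return [max(c / len(values), 1e-6) for c in counts]

    b, c = shares(baseline), shares(current)
    return sum((ci - bi) * math.log(ci / bi) for bi, ci in zip(b, c))


# Synthetic example: prompt lengths at launch vs. this week (invented data).
baseline_lengths = list(range(100))
recent_lengths = [v + 60 for v in range(100)]

score = psi(baseline_lengths, recent_lengths)
ALERT_THRESHOLD = 0.2  # assumed rule-of-thumb cutoff, tune per use case
if score > ALERT_THRESHOLD:
    print(f"Drift alert: PSI={score:.2f}")
```

A monitoring job could run a check like this on a schedule for each tracked signal and raise an alert when the score crosses the chosen threshold.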

Another area that will grow in tandem with the rise of enterprise AI is guardrails for data security. Sitting between inputs, the model and outputs, these rule-based filters can deny prompts that contain undesirable content and redact sensitive information for privacy protection. Guardrail solutions that strike a balance between effectiveness, low latency and cost will be sought after.
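A rule-based guardrail of this kind can be sketched in a few lines. The example below is a simplified illustration, not a complete security control: the deny list, the two regular expressions, and the `guarded_call` wrapper are hypothetical, and `model` is any callable that takes a prompt string and returns a response string.

```python
import re

# Hypothetical deny list; a real deployment would manage this as policy.
BLOCKED_TERMS = ("insider tip", "client privileged")

# Simplified patterns for illustration only; real PII detection is broader.
REDACTIONS = {
    "EMAIL": re.compile(r"[\w.+-]+@[\w-]+(?:\.[\w-]+)+"),
    "SSN": re.compile(r"\b\d{3}-\d{2}-\d{4}\b"),
}


def check_input(prompt):
    """Return (allowed, reason); deny prompts containing a blocked term."""
    lowered = prompt.lower()
    for term in BLOCKED_TERMS:
        if term in lowered:
            return False, f"blocked term: {term}"
    return True, "ok"


def redact(text):
    """Replace anything matching a sensitive pattern with a labeled placeholder."""
    for label, pattern in REDACTIONS.items():
        text = pattern.sub(f"[{label} REDACTED]", text)
    return text


def guarded_call(prompt, model):
    """Filter the input, call the model, then filter the output."""
    allowed, reason = check_input(prompt)
    if not allowed:
        return f"Request denied ({reason})."
    # Redact before the prompt leaves the organization, and again on the way back.
    return redact(model(redact(prompt)))


def echo_model(prompt):
    """Stand-in for an LLM call, used only to demonstrate the wrapper."""
    return "You said: " + prompt


print(guarded_call("Email jane.doe@example.com about SSN 123-45-6789", echo_model))
print(guarded_call("Give me an insider tip on ACME", echo_model))
```

Because each check adds work on every request, the number and complexity of rules is exactly where the trade-off between effectiveness, latency and cost shows up.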

Is the honeymoon phase of GenAI over? Taking the long view, we believe we are now entering a new phase in AI adoption. From data annotation and vector databases to prompt engineering, the market for large language model operations (LLMOps) is clearly growing rapidly and dynamically, with greater specialization and sophistication (see the market map from CB Insights above). Such development is a strong indicator that GenAI has found its rhythm.